|

I am Shreyas, a first-year MSML student at CMU. Before joining graduate school, I was a quantitative researcher at ArthAlpha, an early-stage hedge fund where I applied statistics and machine learning to model the financial markets. Before that, I was at Microsoft India, working on automatic speech recognition for Indian languages. I majored in Mathematics and Computing at the Indian Institute of Technology Kharagpur, working on probabilistic and statistical machine learning, deep learning and a little bit of robotics.

I have been fortunate enough to work under the guidance of some amazing mentors. In the past, I have worked with Prof. Buddhananda Banerjee on Statistical Changepoint Detection in Time Series for my thesis, and with Prof. Guy Van den Broeck and Prof. Yitao Liang on Tractable Probabilistic Modelling at the UCLA StarAI Lab. I have also spent some awesome time as an Applied Scientist Intern at Microsoft working on Automatic Speech Recognition, and with Google Summer of Code open-sourcing machine-learning paper implementations in Julia.

Outside of work, I enjoy playing the keyboard, guitar, table tennis and badminton. I am a hardcore rock music fan and horror movie buff. I do get fascinated by things written well, be it beautiful poetry or well-structured code. Sometimes I think (probably too deeply) and write.

CV / GitHub / LinkedIn / Twitter / Google Scholar |

|

|

|

|

My passion for research stems from trying to answer the question: "How can we develop systems capable of efficient and robust decision making under uncertainty?" I want to answer this question on two fronts:

1. I want to leverage cutting-edge reinforcement-learning techniques to allow for sample-efficient learning and decision making under uncertainty.
2. I want to improve the robustness of machine learning systems, especially with respect to distribution shifts and out-of-distribution generalization, so that such systems can reason about when they go wrong and correct themselves over time.

To this end, I aim to bring insights from a statistical and probabilistic viewpoint to build systems that work in the real world. Tools from Probability Theory, Statistics and Bayesian Analysis can help tremendously in this endeavour. As a broader goal, I eventually want to use these systems to build real-world products in the domains of speech, natural language or healthcare, as an attempt to help improve the lives of people in society. |

|

Guide: Prof. Buddhananda Banerjee, IIT Kharagpur

Thesis / Slides

Changepoint testing is the detection of an abrupt change in the distribution of a time series. Detecting a change in mean is generally posed as a hypothesis testing problem. Self-normalizing statistics aid such detection by being adaptive to the presence and location of a changepoint. We propose such a statistic. In simulation studies, its power rises more sharply under deviation from the null hypothesis than that of all surveyed approaches, indicating the enhanced robustness of our statistic. |
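For readers unfamiliar with the hypothesis-testing framing, here is a minimal sketch of the classical CUSUM statistic for a change in mean. It is the textbook baseline only, not the self-normalized statistic proposed in the thesis. The function name `cusum_statistic`, the use of the full-sample standard deviation as the scale estimate, and the toy series are all assumptions made for illustration.

```python
import math


def cusum_statistic(x):
    """Classical CUSUM change-in-mean statistic (textbook baseline).

    Returns (statistic, k_hat), where
        statistic = max_k |S_k - (k/n) * S_n| / (sigma_hat * sqrt(n)),
    S_k is the k-th partial sum, and k_hat is the maximizing index,
    i.e. the estimated number of observations before the change.
    sigma_hat is the sample standard deviation of the whole series,
    which is a simplifying assumption of this sketch.
    """
    n = len(x)
    if n < 2:
        raise ValueError("need at least two observations")
    total = sum(x)
    mean = total / n
    sigma = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))
    if sigma == 0.0:
        return 0.0, None  # constant series: no evidence of a change

    best_dev, k_hat = 0.0, None
    partial = 0.0
    for k in range(1, n):
        partial += x[k - 1]
        dev = abs(partial - (k / n) * total)
        if dev > best_dev:
            best_dev, k_hat = dev, k
    return best_dev / (sigma * math.sqrt(n)), k_hat


# Toy series (hypothetical data): the mean shifts from 0 to 1 after 10 points.
series = [0.1, -0.1] * 5 + [1.1, 0.9] * 5
statistic, k_hat = cusum_statistic(series)
print(statistic, k_hat)
```

A large value of the statistic is evidence against the null hypothesis of a constant mean. A self-normalized statistic replaces the plug-in scale estimate `sigma` with a normalizer built from the data on either side of each candidate changepoint.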

|

Guide: Prof. Guy Van den Broeck and Prof. Yitao Liang, StarAI Lab, UCLA

Paper / Slides / Poster / Code / TPM Workshop, UAI'21

Structured-decomposable probabilistic circuits model data by encoding different types of context-specific independences (CSIs). These CSIs can serve as prior information for initializing the mixture components of an EM ensemble of circuits. We propose an algorithm that partitions the data in accordance with these CSIs and trains a circuit on each partition to obtain a stronger initialization of the ensemble. |

|

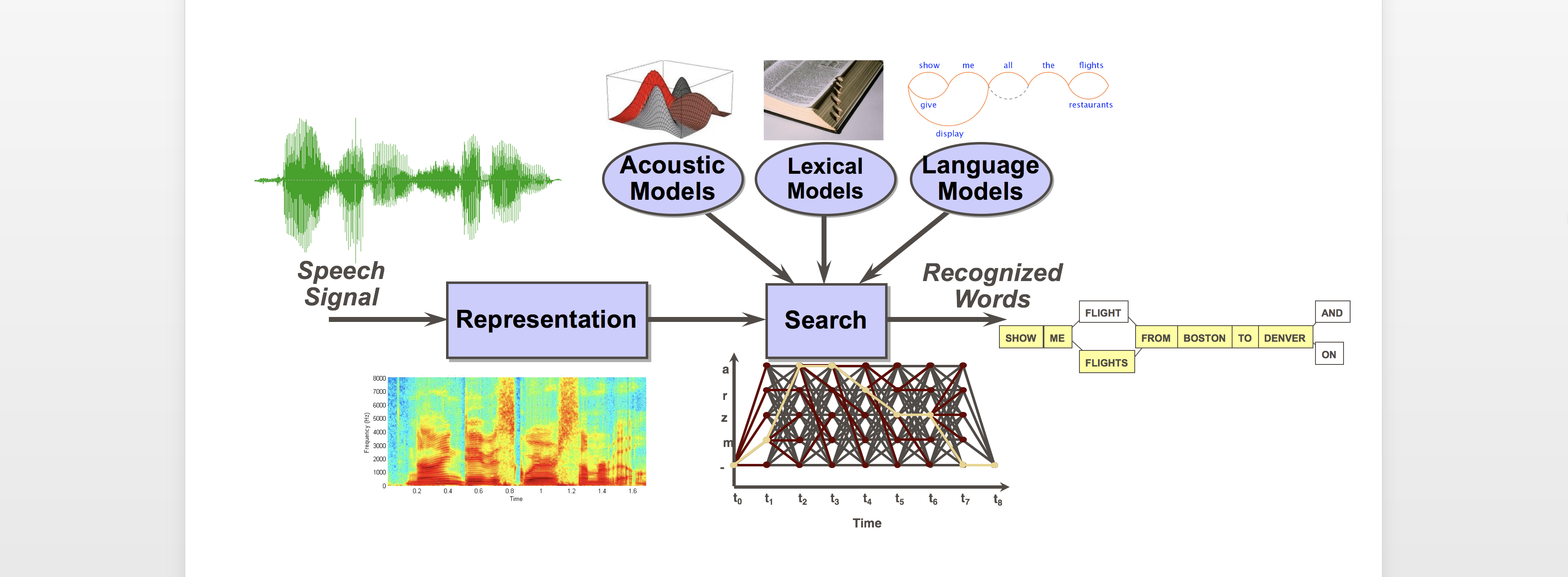

Manager: Ankur Gupta, Microsoft IDC

Building speech-to-text systems for Indian languages is a challenge in robustness, owing to the rich diversity in dialects, accents and pronunciations. I worked on and shipped four speech-to-text models for Indic locales, the most notable of which was my work on enhancing the robustness of the Indian-English Conformer Transducer Model. Using various techniques, I improved the model's performance on entity recognition and in noisy environments, and built scalable systems for data processing, model training and evaluation. |

|

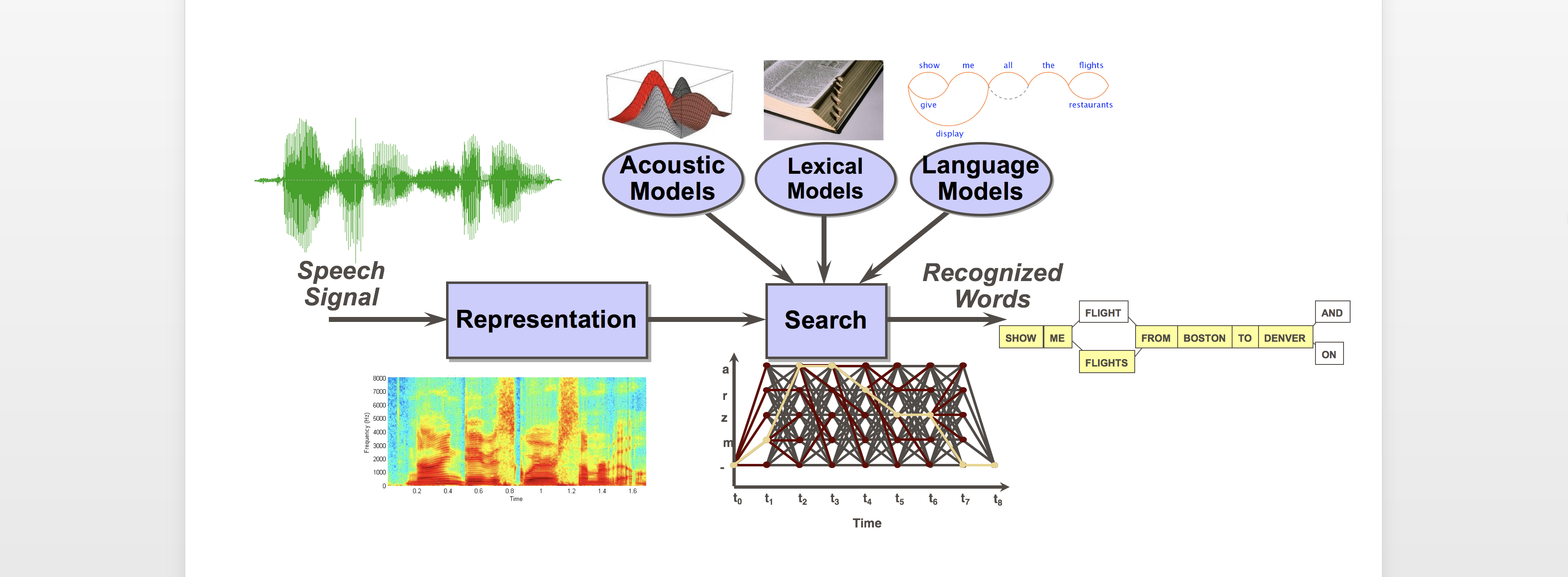

Guide: Prof. Michael Felsberg, Zahra Gharaee, Linkoping University

Paper / Pattern Recognition Journal

Real-world road networks can be represented as a graph with intersections as nodes and roads as edges. Graph-based deep learning approaches can be used to learn efficient representations of such nodes. We survey various algorithms in the literature for this task and propose a DFS-based aggregation scheme that aggregates information from nodes far away from a given node. This scheme improves the performance of the learned representations on the downstream task of link prediction in road networks. |

|

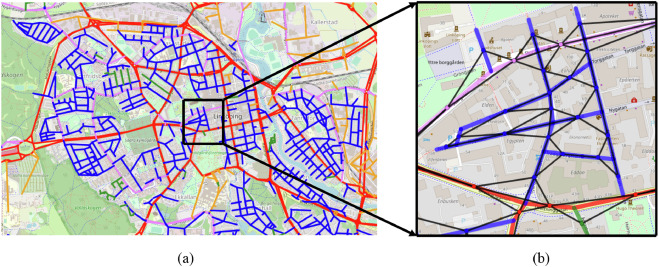

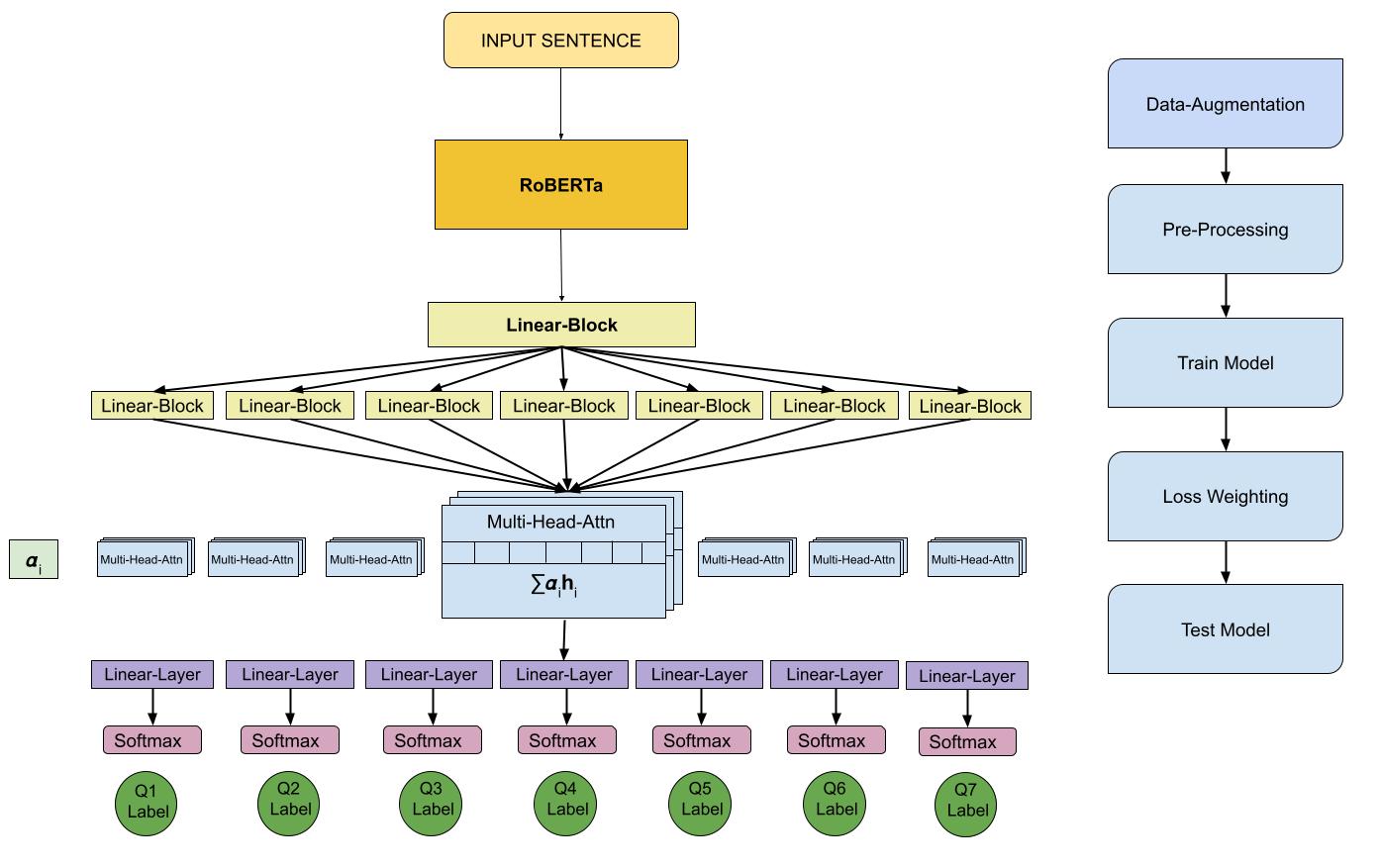

Paper / Code / Task Link / NLP4IF Workshop, NAACL 2021

Tweets posted during a pandemic can spread misinformation if they contain false information. The task was thus to automatically answer multiple binary (yes/no) questions pertaining to a tweet. This problem was framed as a multi-output learning problem, with the answering of each question formulated as a task. Inter-task attention modules were proposed for aggregating information on top of a large language model. The approach earned the runner-up position. |

|

Guide: Prof. Debashish Chakravarty, Autonomous Ground Vehicle Research Group, IIT Kharagpur

Report / Video / Code / Paper on Traffic Sign Recognition / ICPRAM'19

Perception systems are crucial for robots to make sense of their surroundings. Developed an end-to-end object-detection and tracking pipeline for detecting and classifying Indian traffic signs. Built a real-time network for road-region segmentation. Both modules were tested on the Mahindra-e2o electric vehicle. |

|

|

|

Course: Advanced Machine Learning, Prof. Pabitra Mitra

Report

Surveyed multiple papers on calibration and uncertainty in deep neural networks. Methods like MC-Dropout, Bayes by Backprop, SGLD and p-SGLD were implemented and tested on MNIST. Uncertainty estimates of the predictions were obtained on out-of-sample images as a proof of concept. |

|

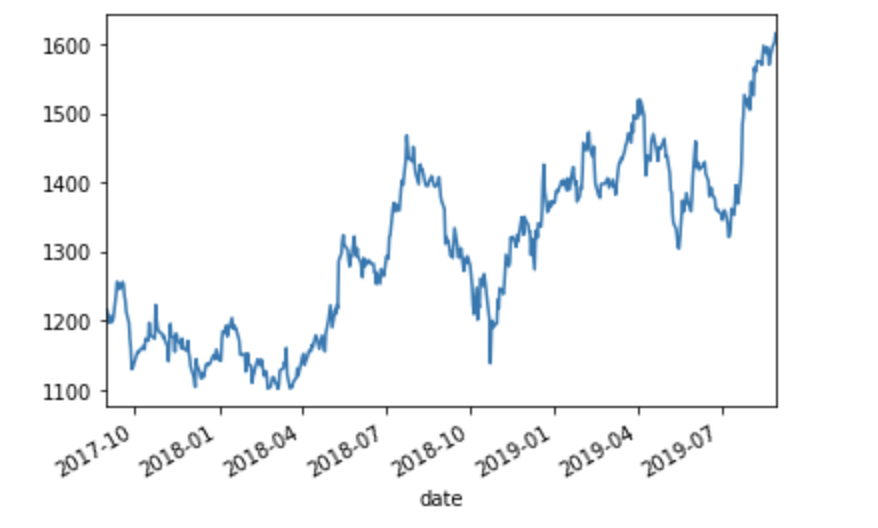

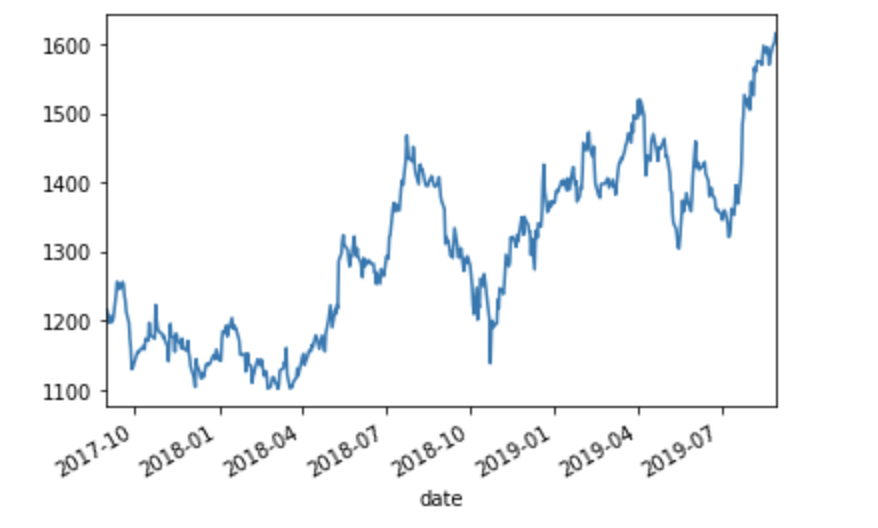

Course: Optimization Methods in Finance, Prof. Geetanjali Panda

Report

Built forecasting models to predict future stock prices of 30 stocks using LSTMs, Linear Regression and Support Vector Regression. Forecasts were used to select the top `N` performing stocks to build a portfolio. Markowitz models were applied for portfolio optimization. Use of forecasts generally led to better Sharpe ratios and returns on the test set. |
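To illustrate the kind of optimization a Markowitz model performs, here is a minimal sketch of the global minimum-variance portfolio, which has the closed form w = Σ⁻¹1 / (1ᵀΣ⁻¹1) when the portfolio is fully invested and short selling is allowed. The report does not say which Markowitz variants were used, so this is only one simple instance. The covariance matrix and the helper names `solve_linear`, `min_variance_weights` and `portfolio_variance` are hypothetical.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def min_variance_weights(cov):
    """Global minimum-variance weights: Sigma^{-1} 1, normalized to sum to 1."""
    raw = solve_linear(cov, [1.0] * len(cov))
    total = sum(raw)
    return [w / total for w in raw]


def portfolio_variance(cov, weights):
    """Portfolio variance w^T Sigma w."""
    n = len(weights)
    return sum(weights[i] * cov[i][j] * weights[j]
               for i in range(n) for j in range(n))


# Hypothetical covariance matrix of returns for three selected stocks.
cov = [
    [0.0400, 0.0060, 0.0000],
    [0.0060, 0.0100, 0.0020],
    [0.0000, 0.0020, 0.0225],
]
weights = min_variance_weights(cov)
print(weights, portfolio_variance(cov, weights))
```

In a pipeline like the one described, the forecasts would decide which stocks enter `cov`, and the optimizer would then decide how much of each to hold.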

|

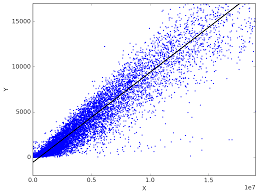

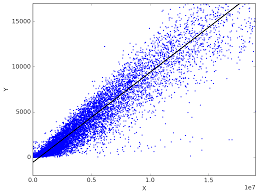

Course: Regression and Time Series, Prof. Buddhananda Banerjee

Report

Built statistical linear regression models to predict medical costs of patients. Improved the R-squared value of the fit by implementing and analysing residuals-vs-fitted, scale-location, residuals-vs-leverage and normal Q-Q plots. |

|

Blog

Implemented multi-modal image2image translation GANs like pix2pix, CycleGAN and SRGAN purely in The Julia Language. Wrote a library for reinforcement learning containing PPO and TRPO implementations. Implemented Neural-Image-Captioning in a pure Julia codebase. |

|

Course: Cryptography and Network Security, Guide: Prof. Sourav Mukopadhyay

Code

Implemented a hybrid block cipher for encrypting 128-bit inputs. The first 5 rounds are AES, followed by 5 rounds of a custom Feistel cipher with a variable number of blocks. Finally, 5 more rounds of AES follow. |
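The Feistel stage is what makes such a construction easy to invert: decryption reuses the same round structure with the round keys in reverse order, whatever the round function is. Below is a minimal sketch of a balanced two-branch Feistel network on a 128-bit block. It is not the custom cipher from the project (which uses a variable number of blocks and is sandwiched between AES rounds), and the SHA-256-based `round_function` is a placeholder chosen only so the example runs with the standard library. The sketch is for illustration and is not secure.

```python
import hashlib

HALF_BYTES = 8  # a 128-bit block is split into two 64-bit halves


def round_function(half: bytes, round_key: bytes) -> bytes:
    """Placeholder round function (an assumption, not the project's)."""
    return hashlib.sha256(round_key + half).digest()[:HALF_BYTES]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def feistel_encrypt(block: bytes, round_keys) -> bytes:
    if len(block) != 2 * HALF_BYTES:
        raise ValueError("block must be exactly 16 bytes")
    left, right = block[:HALF_BYTES], block[HALF_BYTES:]
    for key in round_keys:
        # One round: (L, R) -> (R, L xor F(R, key))
        left, right = right, xor_bytes(left, round_function(right, key))
    return left + right


def feistel_decrypt(block: bytes, round_keys) -> bytes:
    if len(block) != 2 * HALF_BYTES:
        raise ValueError("block must be exactly 16 bytes")
    left, right = block[:HALF_BYTES], block[HALF_BYTES:]
    for key in reversed(round_keys):
        # Inverse round: (L', R') -> (R' xor F(L', key), L')
        left, right = xor_bytes(right, round_function(left, key)), left
    return left + right


# Five hypothetical round keys, mirroring the 5 Feistel rounds described above.
keys = [bytes([i]) * 4 for i in range(5)]
plaintext = bytes(range(16))
ciphertext = feistel_encrypt(plaintext, keys)
assert feistel_decrypt(ciphertext, keys) == plaintext
```

Because the round function is only ever evaluated in the forward direction, it does not need to be invertible, which is why a hash can stand in for it here.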

|

Intelligent Ground Vehicle Competition Entry from IIT Kharagpur, Prof. Debashish Chakravarty

Paper / Video

Built the planning and perception stack for the Autonomous Navigation Challenge at Intelligent Ground Vehicle Competition'19, Michigan University, USA. The robot had to follow a set of GPS waypoints autonomously, remaining within lane boundaries and avoiding obstacles. Used SVMs for object detection and super-pixel clustering for lane segmentation. The entry took the runner-up position and won the early-qualification round. |

Of course I did not design this page, |